Warehouse Importer

Reach out to us to retrieve your API secret.

In the end, warehouses all look alike. They all have tables, columns, users and queries. So we've defined a format, and as long as you follow it, we'll load your metadata into Catalog.

You just need to fill in the 7 files below and push them to our endpoint using the Catalog Uploader.

All 7 files are mandatory, and the data must be consistent across them.

For example, if you add a column to the column file but the table that contains it is not in the table file, it will fail to load into Catalog.

Please always prefix the file names with a Unix timestamp.
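The naming rule can be sketched as follows. This is a minimal illustration, not the official convention: the `<timestamp>_<name>.csv` pattern (underscore separator, one shared timestamp for all files) is an assumption, and `timestamped_names` is a hypothetical helper.

```python
import time
from typing import List, Optional

# The seven required files, named after the sections of this page.
REQUIRED_FILES = ["database", "schema", "table", "column", "query", "view_ddl", "user"]


def timestamped_names(timestamp: Optional[int] = None) -> List[str]:
    """Return one file name per required file, all sharing one Unix timestamp prefix."""
    ts = int(time.time()) if timestamp is None else timestamp
    # Assumption: timestamp and name are joined with an underscore.
    return [f"{ts}_{name}.csv" for name in REQUIRED_FILES]


print(timestamped_names(1700000000))
```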

CSV formatting

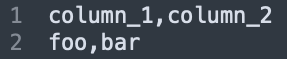

Here's an example of a very simple CSV file:

Some fields, such as tags, are typed as list[string].

In that case, several formats are accepted:

- lists "['a', 'b']"

- tuples "('a', 'b')"

- sets "{'a', 'b', 'c'}"

Empty list allowed: []

Singleton allowed: 'a'

Multiple types allowed: "['foo', 100, 19.8]"
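The accepted formats all read like Python literals, so one way to check a value before uploading is to parse it locally. The sketch below is only an illustration under that assumption; it is not how Catalog parses the field, and `parse_list_field` is a hypothetical helper.

```python
import ast
from typing import Any, List


def parse_list_field(raw: str) -> List[Any]:
    """Interpret a list-typed CSV cell as a Python list."""
    text = raw.strip()
    if not text:
        return []  # optional field left as an empty string
    value = ast.literal_eval(text)  # safe evaluation of literals only
    if isinstance(value, set):
        # Sets are unordered; sort by string form for a stable result.
        return sorted(value, key=str)
    if isinstance(value, (list, tuple)):
        return list(value)
    return [value]  # singleton such as 'a'


for cell in ["['a', 'b']", "('a', 'b')", "{'a', 'b', 'c'}", "[]", "'a'", "['foo', 100, 19.8]"]:
    print(cell, "->", parse_list_field(cell))
```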

Forbidden characters

- Column separator is the comma: ,

- Row separator is the carriage return

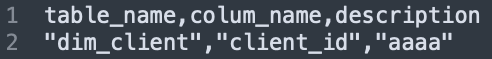

Quoting

Most string fields (table names, column names, etc.) should not contain commas or carriage returns. The problem generally arises with large text fields, such as SQL queries or descriptions.

If you have any doubts, you can quote all your text fields:
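As a sketch of quoting every field, Python's standard `csv` module can do it with `csv.QUOTE_ALL`. The header row with the field names and the sample values are assumptions made for illustration; `to_quoted_csv` is a hypothetical helper.

```python
import csv
import io
from typing import List, Sequence


def to_quoted_csv(header: Sequence[str], rows: List[Sequence[str]]) -> str:
    """Serialise rows to CSV text with every field wrapped in double quotes."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, quoting=csv.QUOTE_ALL)
    writer.writerow(header)  # assumption: the file starts with a header row
    writer.writerows(rows)
    return buffer.getvalue()


# A view definition containing both a comma and a line break.
text = to_quoted_csv(
    ["database_name", "schema_name", "view_name", "view_definition"],
    [["analytics", "public", "active_users", "SELECT id, email\nFROM users"]],
)
print(text)
```

Because the SQL text is quoted, the comma and the line break inside it are read back as part of the field rather than as separators.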

Files

🔑 Primary Key (must be unique)

🔐 Foreign Key (must reference an existing entry)

❓ Optional (empty string in the CSV)

1. Database

database.csv

Fields

id string 🔑

database_name string

2. Schema

schema.csv

Fields

id string 🔑

database_id string → database.id 🔐

schema_name string

description string ❓

tags list[string] ❓

3. Table

table.csv

Fields

id string 🔑

schema_id string → schema.id 🔐

table_name string

description string ❓

tags list[string] ❓

type enum {TABLE | VIEW | EXTERNAL | TOPIC}

owner_external_id string → user.id ❓

4. Column

column.csv

Fields

id string 🔑

table_id string → table.id 🔐

column_name string

description string ❓

data_type enum {BOOLEAN | INTEGER | FLOAT | STRING | ... | CUSTOM}

ordinal_position positive integer ❓
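Before uploading, the 🔑 and 🔐 rules can be checked locally. The sketch below covers the column → table reference and primary-key uniqueness; the helper names and sample rows are hypothetical, and a header row with the field names is assumed.

```python
import csv
import io
from collections import Counter
from typing import List


def duplicate_ids(csv_text: str) -> List[str]:
    """Return ids that appear more than once (primary keys must be unique)."""
    counts = Counter(row["id"] for row in csv.DictReader(io.StringIO(csv_text)))
    return [key for key, n in counts.items() if n > 1]


def orphan_columns(table_csv: str, column_csv: str) -> List[str]:
    """Return ids of columns whose table_id is missing from the table file."""
    table_ids = {row["id"] for row in csv.DictReader(io.StringIO(table_csv))}
    return [
        row["id"]
        for row in csv.DictReader(io.StringIO(column_csv))
        if row["table_id"] not in table_ids
    ]


# Illustrative data only.
TABLES = (
    "id,schema_id,table_name,description,tags,type,owner_external_id\n"
    "t1,s1,orders,,,TABLE,\n"
)
COLUMNS = (
    "id,table_id,column_name,description,data_type,ordinal_position\n"
    "c1,t1,order_id,,INTEGER,1\n"
    "c2,t9,amount,,FLOAT,2\n"
)

# c2 references t9, which is not in the table file.
print(orphan_columns(TABLES, COLUMNS))
```

The same pattern applies to the other references, such as schema_id → schema.id and database_id → database.id.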

5. Query

query.csv

If you do not want to fill in the Query file, you can simply upload it with no data (the file itself is required).

We only ingest queries that ran on the day before your metadata is ingested. Please make sure to include only these in the file; any others will be ignored.
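A minimal sketch of keeping only the previous day's queries before writing the file. It assumes that start_time is an ISO 8601 string such as `2024-03-10 14:05:00` and that the day boundary follows the timestamp's own clock; neither the timestamp format nor the time zone is specified on this page, and the function names are hypothetical.

```python
from datetime import date, datetime, timedelta
from typing import Dict, List


def ran_previous_day(start_time: str, ingestion_day: date) -> bool:
    """True if the query started on the calendar day before ingestion_day."""
    started = datetime.fromisoformat(start_time)  # assumed ISO 8601 format
    return started.date() == ingestion_day - timedelta(days=1)


def previous_day_queries(rows: List[Dict[str, str]], ingestion_day: date) -> List[Dict[str, str]]:
    """Keep only the rows whose start_time falls on the previous day."""
    return [row for row in rows if ran_previous_day(row["start_time"], ingestion_day)]


# Illustrative rows only; real rows carry all the fields of the Query file.
rows = [
    {"query_id": "q1", "start_time": "2024-03-09 23:59:59"},
    {"query_id": "q2", "start_time": "2024-03-10 14:05:00"},
    {"query_id": "q3", "start_time": "2024-03-11 00:00:00"},
]
kept = previous_day_queries(rows, date(2024, 3, 11))
print([row["query_id"] for row in kept])
```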

Fields

query_id string → query.id

database_id string → database.id 🔐

database_name string → database.name

schema_name string → schema.name

query_text string

user_id string → user.id 🔐

user_name string → user name

start_time timestamp

end_time timestamp ❓

6. View DDL

view_ddl.csv

If you do not want to fill in the View DDL file, you can simply upload it with no data (the file itself is required).

Fields

database_name string

schema_name string

view_name string

view_definition string

7. User

user.csv

If you do not want to fill in the User file, you can simply upload it with no data (the file itself is required).

Fields

id string 🔑

email string ❓

first_name string ❓

last_name string ❓

Lineage

We compute lineage for your integration by analysing and parsing the Queries and View DDL when possible.

Alternatively, you can complete the following lineage mapping for Tables and/or Columns and we will ingest them during each update.

1. Table Lineage

external_table_lineage.csv

Fields

parent_path string 🔑: path of the parent table

child_path string 🔑: path of the child table

2. Column Lineage

external_column_lineage.csv

Fields

parent_path string 🔑: path of the parent column

child_path string 🔑: path of the child column
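A lineage file can be sketched as below. Two things are assumptions here: the two 🔑 markers are read as a composite key, so each (parent_path, child_path) pair should appear once, and the dotted paths are hypothetical placeholders, since the path format is not described on this page. A header row with the field names is also assumed.

```python
import csv
import io
from typing import Iterable, Tuple


def lineage_csv(pairs: Iterable[Tuple[str, str]]) -> str:
    """Build lineage CSV text, dropping repeated (parent, child) pairs."""
    unique = list(dict.fromkeys(pairs))  # keeps first occurrence, preserves order
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["parent_path", "child_path"])
    writer.writerows(unique)
    return buffer.getvalue()


# Hypothetical paths, for illustration only.
pairs = [
    ("analytics.raw.orders", "analytics.marts.orders_daily"),
    ("analytics.raw.customers", "analytics.marts.orders_daily"),
    ("analytics.raw.orders", "analytics.marts.orders_daily"),  # duplicate pair
]
print(lineage_csv(pairs))
```

The same helper works for external_column_lineage.csv, with column paths in place of table paths.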