Understanding Node Run Order in Coalesce

In Coalesce, the order in which Nodes execute is determined by their dependencies. A Node will only run after all the Nodes it depends on have completed. This approach ensures that data transformations occur in the correct sequence, maintaining data integrity throughout the pipeline.

To influence or modify the execution order, you can adjust the dependencies between Nodes. This can be done by specifying upstream Nodes that a particular Node relies upon. By configuring these relationships, you control the sequence in which Nodes execute.

Run Flow Example

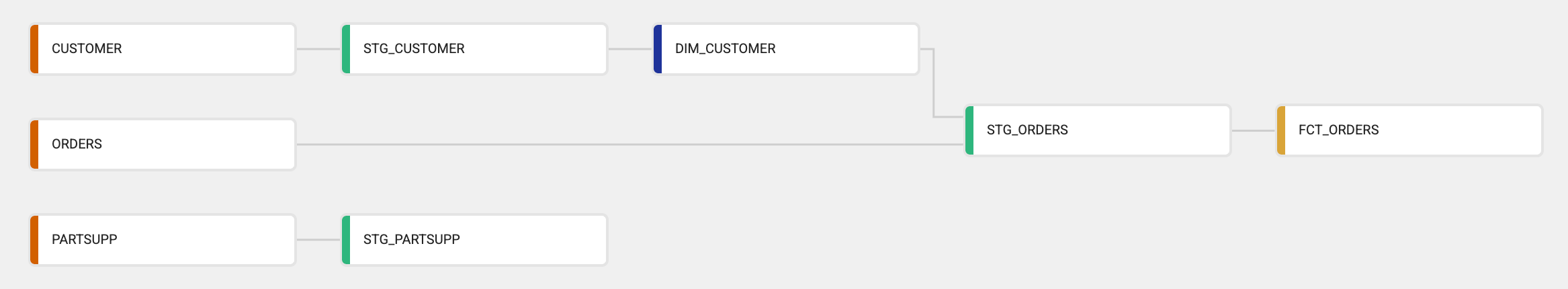

Here is an example of a run and how each Node waits for the upstream Node to finish before running.

- Source Nodes: These Nodes are raw input tables with no dependencies, and are the first to run.

CUSTOMERORDERSPARTSUPP

- Stage Node: These Nodes will run after the Source Nodes are finished.

STG_CUSTOMER(depends onCUSTOMER)STG_PARTSUPP(depends onPARTSUPP)

- Dimension Node: This Node will run after

STG_CUSTOMERis finished.DIM_CUSTOMER(depends onSTG_CUSTOMER)

- Orders Staging: This Node can't run until both

ORDERS(Source Node) andDIM_CUSTOMER(Dimension Node) are complete.STG_ORDERS(depends onORDERSandDIM_CUSTOMER)

- Final Fact Table: The final Node and only run after

STG_ORDERSis done.FCT_ORDERS(depends onSTG_ORDERS)

Ways To Control Run Order

Since runs are controlled by dependencies, you can influence the processing order or trigger specific operations by adjusting those dependencies or using features designed to manage when and how data is transformed.

Adjusting Node Dependencies

The most direct way to alter run order is by modifying the dependencies between Nodes. By ensuring that a Node depends on another, you can control the sequence in which they execute. This is typically done by specifying upstream Nodes that a particular Node relies upon. You can set this by using Ref Functions.

Marketplace Packages

We have a variety of packages that can add Node Types or functionalities that affect run order.

- Dynamic Tables Package: This package provides tools for creating, deploying, and managing dynamic tables, which can help in automating table updates and ensuring data consistency.

- Deferred Merge Package: Allows for deferring certain operations, which can be strategically used to control when specific transformations occur within the pipeline.

Creating Custom Node Types

For more granular control, you can create a custom Node Type.

- Node Definition: Specify UI elements and configurations.

- Run Template: Define how the node will process data, allowing for the inclusion of specific logic that can affect the data processing for that Node.

Join Templates

Join Templates can be used to define how joins are performed between Nodes. By specifying particular join conditions or sequences, you can control the order in which data from different nodes is combined.

capitalized: 'My Node Name'

short: 'MNN'

plural: 'My Node Names'

tagColor: '#FF5A5F'

joinTemplate: |

{%- for dep in sources[0].dependencies -%}

{%- if loop.first %} FROM {% endif -%}

{%- if not loop.first %}LEFT JOIN {% endif -%}

{{- ref_raw(dep.node.location.name, dep.node.name) }} {{ dep.node.name }}

{% if not loop.first %} ON {{ sources[0].dependencies[loop.index0].node.name }}./*COLUMN*/ = {{ sources[0].dependencies[loop.index0 - 1].node.name }}./*COLUMN*/ {%- endif %}

{% endfor -%}

WHERE 1=1

Using Pre-SQL and Post-SQL Scripts

You can run a custom SQL script before and after a Node runs.

- Pre-SQL: Executed before the main transformation, can be used to set up necessary conditions or dependencies.

- Post-SQL: Executed after the main transformation, useful for cleanup or triggering subsequent processes.

Using Macros and Transforms

You can extend and customize how Nodes behave using macros and transforms:

- Transforms: Prebuilt or custom logic blocks applied to nodes that define how data should be shaped or filtered. You can chain or modify transforms to control how data flows through the pipeline.

- Macros: Reusable snippets of SQL or logic that can be injected into Nodes, transforms, or scripts. Macros are helpful for inserting consistent logic or dynamically altering behavior at runtime.